Last May, I spoke at SMX London on the panel ‘Future-proof your SEO’.

The idea for my presentation arose from some frustrating experiences during my various jobs as an in-house SEO. We would spend 3-4 months crafting a powerful structure, new content types or SEO features, and then one unexpected day, for some technical reason, the code breaks or a page disappears and, guess what? You may not find out about it until it is too late. You suddenly see traffic and rankings drop, and you start panicking that something is terribly broken. By the time you act and get it fixed, the damage is done.

It is quite remarkable how easily it can all go down the drain when a technical disaster occurs, especially on large website structures. Organic traffic drops, and in my experience it can be up to three times as hard to get that level of traffic back. Google doesn’t like sites that frequently experience problems, as they cause volatility in the SERPs (search engine results pages).

This is why, in an enterprise SEO environment, I always recommend having SEO monitoring systems in place that can alert you as soon as something is broken.

So I named my deck ‘Prevent enterprise-level disasters with SEO alerts’. Here’s the SlideShare deck in case you don’t want to read the post. If you work on enterprise SEO, however, I recommend reading it, as I’m sure you will get some actionable tips:

As SEOs, we tend to go to great lengths to make our websites grow, investing a lot of time, money and effort into building great foundations, best-practice URL structures, clever IA (information architecture) and creative content, and investing heavily in sophisticated content marketing.

We do all that and more, but we often forget something very important: bulletproofing our fortress. I personally learned the hard way to keep tabs on monitoring in order to stay on the safe side with SEO. It’s about disaster prevention.

I structured my presentation into five easy-to-read, digestible modules:

- Traffic

- Rankings

- Your site architecture and URL integrity

- Server health

- Backlinks

1. Monitor traffic drops (and spikes)

This one is probably the most obvious, yet I would bet that over 80% of in-house enterprise SEOs still do not use it. I used to be one of them: I knew I could get these alerts, but I wasn’t setting them up.

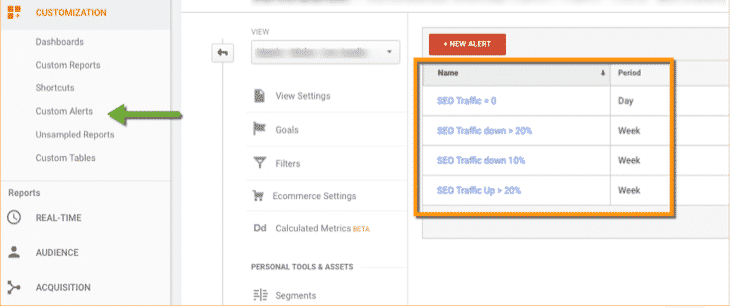

I invite you to go into Google Analytics and set up your first custom alert now. A word of advice: keep it simple, or you’ll soon be inundated with alert emails.
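Google Analytics custom alerts are configured in the GA interface rather than in code, but the rule behind a simple traffic alert is easy to sketch. The Python below is a minimal illustration, assuming you already have organic session counts for two comparable periods (e.g. this Monday vs last Monday); the function name and the 30% threshold are hypothetical choices, not GA settings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrafficAlert:
    kind: str          # "drop" or "spike"
    change_pct: float  # % change vs the comparison period


def check_traffic(current: int, previous: int,
                  threshold_pct: float = 30.0) -> Optional[TrafficAlert]:
    """Flag a drop or spike in organic sessions between two comparable periods.

    Hypothetical helper: the 30% default threshold is an illustrative
    assumption, not a Google Analytics setting.
    """
    if previous <= 0:
        return None  # no baseline to compare against
    change_pct = (current - previous) * 100 / previous
    if change_pct <= -threshold_pct:
        return TrafficAlert("drop", round(change_pct, 1))
    if change_pct >= threshold_pct:
        return TrafficAlert("spike", round(change_pct, 1))
    return None  # within the normal range: no email


print(check_traffic(6_500, 10_000))  # TrafficAlert(kind='drop', change_pct=-35.0)
```

A single threshold per site keeps the number of alert emails manageable, which is the point of keeping alerts simple.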

2. Monitor rankings

How many keywords are you tracking?

How do you label, group or prioritize them? I recommend setting aside your top 20-30 keywords, the ones you simply cannot afford to stop ranking for, and setting up custom alerts in your preferred keyword ranking tool to notify you of drastic ranking changes.

Here are some examples of alerts you may want to set up:

- Track all keywords on 1st page to ensure they don’t leave that page

- Track all keywords that move from 2nd to 1st page

- Track all top-position keywords that drop out of the top 5 positions

- Track all keywords that move into the top positions

- Track all 1st or 2nd position keywords (new movers or losers)

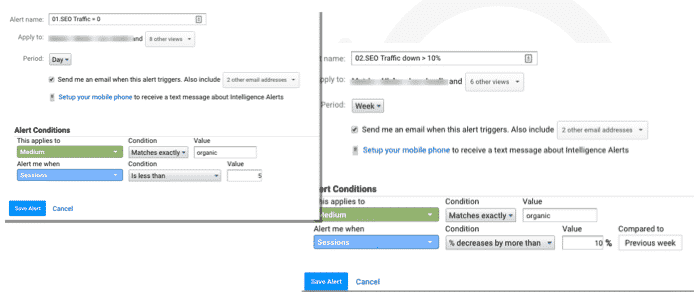

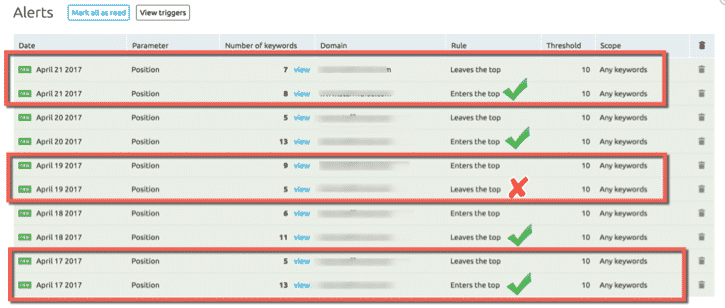
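Ranking tools such as SEMrush evaluate these rules for you. To make the logic concrete, here is a minimal sketch that maps a keyword’s previous and current positions to the alert types in the list above. It assumes 10 organic results per page and treats a missing position (None) as ‘not ranking’; the function names are hypothetical.

```python
from typing import Optional

PAGE_SIZE = 10  # assumption: 10 organic results per SERP page
TOP_N = 5


def on_page_one(pos: Optional[int]) -> bool:
    return pos is not None and pos <= PAGE_SIZE


def in_top(pos: Optional[int], n: int = TOP_N) -> bool:
    return pos is not None and pos <= n


def movement_alerts(old: Optional[int], new: Optional[int]) -> list[str]:
    """Map a keyword's previous and current position (None = not ranking)
    to the ranking alert types."""
    alerts = []
    if on_page_one(old) and not on_page_one(new):
        alerts.append("left page 1")
    if old is not None and PAGE_SIZE < old <= 2 * PAGE_SIZE and on_page_one(new):
        alerts.append("moved from page 2 to page 1")
    if in_top(old) and not in_top(new):
        alerts.append(f"dropped out of top {TOP_N}")
    if in_top(new) and not in_top(old):
        alerts.append(f"entered top {TOP_N}")
    if in_top(new, 2) and not in_top(old, 2):
        alerts.append("new mover into positions 1-2")
    if in_top(old, 2) and not in_top(new, 2):
        alerts.append("lost positions 1-2")
    return alerts


print(movement_alerts(3, 12))  # ['left page 1', 'dropped out of top 5']
```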

The goal is to get messages like this one when things go off the rails, or when you see sudden ranking surges. Of course, it’s also nice to get the good news as alerts.

The example above in particular is a snapshot of typical weekly alerts on keywords entering or leaving the 1st page, from SEMrush, which boasts a nice alerting system. It gives you a global picture of all the alerts you may receive over, say, a week.

With this, I aim to get an idea of overall SEO evolution and whether things are moving in the right direction: as long as more keywords are moving into page 1 than leaving it, I’m fine. I usually don’t chase individual keyword rankings unless it’s a special case.

If, however, the picture starts to look different, with many more keywords leaving page 1 than entering it, then I would expect to see a correlation with other alerts, such as the custom traffic alerts in GA or the others I will cover in a moment.

I have set up two types of ranking fluctuation alerts for each of the top 5 sites in my network:

- No. of keywords entering/leaving the 1st page

- No. of keywords entering/leaving the top 5 positions
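Those two weekly counts, combined with the rule of thumb described earlier (fine while more keywords enter page 1 than leave it), can be sketched as follows. This assumes you can export two snapshots of keyword positions; the function names are hypothetical, and the simple `leaving > entering` trigger is an assumption, since the text only says to worry when many more keywords leave than enter.

```python
def entering_leaving(old: dict[str, int], new: dict[str, int],
                     cutoff: int) -> tuple[int, int]:
    """Count keywords entering and leaving positions 1..cutoff between two
    ranking snapshots (keyword -> position). A keyword missing from a
    snapshot is treated as not ranking."""
    before = {kw for kw, pos in old.items() if pos <= cutoff}
    after = {kw for kw, pos in new.items() if pos <= cutoff}
    return len(after - before), len(before - after)


def weekly_summary(old: dict[str, int], new: dict[str, int]) -> dict[str, dict]:
    summary = {}
    for label, cutoff in (("page 1", 10), ("top 5", 5)):
        entering, leaving = entering_leaving(old, new, cutoff)
        summary[label] = {
            "entering": entering,
            "leaving": leaving,
            # rule of thumb: investigate only when more keywords leave than enter
            "investigate": leaving > entering,
        }
    return summary


# Hypothetical snapshots for illustration.
last_week = {"red shoes": 3, "blue shoes": 8, "shoe shop": 15, "trainers": 4}
this_week = {"red shoes": 12, "blue shoes": 4, "shoe shop": 9, "trainers": 11}
print(weekly_summary(last_week, this_week)["page 1"])
# {'entering': 1, 'leaving': 2, 'investigate': True}
```

When `investigate` is true, that is the moment to cross-check the traffic alerts rather than chase individual keywords.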

To be clear, it’s not that I get overly obsessed with these alerts. These days, I just look broadly at how my target keyword groups move onto the 1st page. However, as I say, there are always cases where you absolutely must keep tabs on certain keywords, and this is when SEO alerts like this one come in handy.

Most ranking tools these days, or at least most SEO tool suites, let you set up these kinds of monitoring alerts.

This is what one of my alert-trigger dashboards looks like:

and this is what I get in my inbox every day:

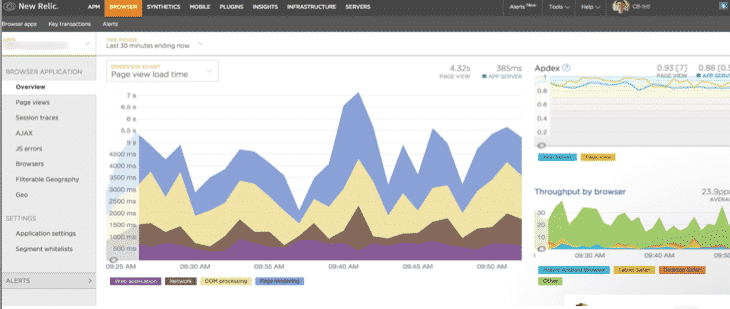

3. Monitor server performance

We all know how important it is to have 100% uptime. But there are other things you can measure apart from uptime.

For example, with tools like New Relic it is easy to spot URL groups or specific URLs that tend to slow down for some reason.

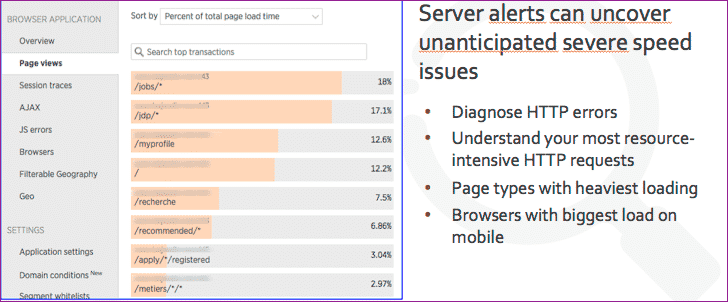

Server alerts can uncover unanticipated speed issues. They can help you:

- diagnose HTTP errors

- identify the heaviest-loading pages on the site

- identify the browsers with the biggest load on mobile

- spot site content that makes a large number of HTTP server requests
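New Relic and Dareboost surface this in their own dashboards. As a rough illustration of how slow URL groups and HTTP errors can be spotted, the sketch below assumes you have exported response-time samples as (URL, milliseconds, status) tuples. Grouping by the first path segment and the 1,000 ms threshold are hypothetical choices, not settings of either tool.

```python
from collections import defaultdict
from statistics import median
from urllib.parse import urlsplit


def url_group(url: str) -> str:
    """Group URLs by first path segment, e.g. /product/123 -> /product/."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return f"/{segments[0]}/" if segments else "/"


def flag_url_groups(samples, threshold_ms: float = 1000):
    """samples: iterable of (url, response_ms, http_status) tuples.

    Returns {group: (median_ms, server_error_count)} for groups whose median
    response time exceeds threshold_ms or that returned any 5xx status.
    """
    times = defaultdict(list)
    errors = defaultdict(int)
    for url, ms, status in samples:
        group = url_group(url)
        times[group].append(ms)
        if status >= 500:
            errors[group] += 1
    flagged = {}
    for group, values in times.items():
        med = median(values)
        if med > threshold_ms or errors[group]:
            flagged[group] = (med, errors[group])
    return flagged
```

The median is used rather than the mean so that a single very slow request does not flag a whole URL group on its own.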

I have been using New Relic together with Dareboost to monitor speed performance and get alerted to slowness issues as they arise.

I really enjoy the alerts system on Dareboost.

4. Monitor website changes (URL structures, robots, sitemaps…)

This one is probably the most important of them all. If you are working on large implementations, migrations or heavy SEO projects where millions of URLs may be at stake, then you need a tool that can help you compare the ‘before’ and ‘after’ of the work you are leading.

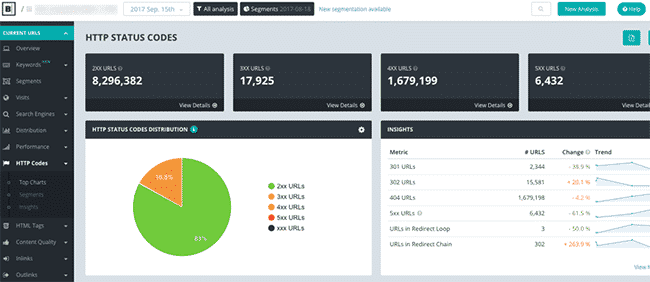

This is about crawling efficiency, indexation, URL data integrity, navigation and crawl errors. Any website larger than 5,000 URLs requires special attention to content depth, to scrutinizing your content and to monitoring how much of that content you want crawled.
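The ‘before’ and ‘after’ comparison at the heart of this is simple to express. Here is a minimal sketch, assuming each crawl has been exported as a mapping of URL to HTTP status code; the function and category names are hypothetical, and real tools compare far more than status codes.

```python
def compare_crawls(before: dict[str, int], after: dict[str, int]) -> dict[str, list[str]]:
    """Compare two crawls, each mapping URL -> HTTP status code."""
    ok_before = {url for url, status in before.items() if status == 200}
    ok_after = {url for url, status in after.items() if status == 200}
    return {
        # healthy before, not found at all in the new crawl
        "disappeared": sorted(ok_before - set(after)),
        # still crawled, but no longer returning 200
        "broken": sorted(url for url in ok_before & set(after) if after[url] != 200),
        # healthy URLs that did not exist before
        "new": sorted(ok_after - set(before)),
    }
```

A non-empty `disappeared` or `broken` list right after a deployment is the kind of signal worth an immediate email.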

For this specific type of monitoring, I combine the following two tools:

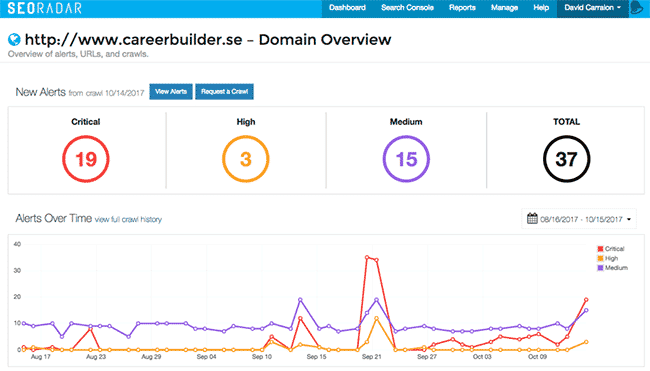

SEORadar is a website change-monitoring tool. Let me quickly explain its value before you start thinking that this is just one more SEO tool. It’s not.

Botify is a web-based, cloud-powered crawling tool, which I typically use to validate alerts about potential issues on the platform. I use its scheduled crawls functionality to validate alerts from SEORadar that may be flagging an issue.

A lot of things can go wrong in the course of an implementation, or while working on a project with multidisciplinary input and objectives. For example, an HTML sitemap that is critical to passing PageRank across your site may suddenly disappear. How would you know about it? Unless you have an automated process that checks the integrity of your website’s URL structures and alerts you with a handy email or SMS, you may not find out until it is too late.

Outages, issues or malfunctions typically happen as collateral damage from something else the development teams are working on. Perhaps the project you were working on had nothing to do with this HTML sitemap page, but for some technical reason, your valuable PageRank-passing HTML page broke.

It’s a type of collateral damage that you need to be aware of immediately. Let’s face it, you cannot be expected to test the data integrity of the entire site every time you test something specific.

With SEORadar, if it’s configured the right way, you can get notifications covering a variety of different areas:

- meta tags

- URL structures and paths

- rel canonicals

- sitemaps

- robots

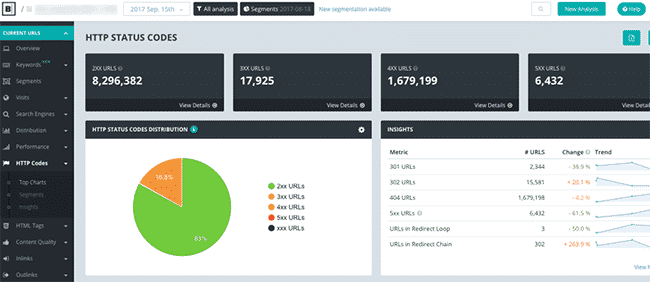
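SEORadar handles this change detection as a service. To show the underlying idea, here is a minimal sketch that compares SEO-relevant fields of the same pages across two snapshots. The snapshot format and field names are hypothetical, and it only covers per-page tags, not robots.txt or sitemap files.

```python
# Fields to watch on each page (hypothetical snapshot keys).
WATCHED_FIELDS = ("title", "meta_description", "meta_robots", "canonical")


def attribute_changes(before: dict[str, dict], after: dict[str, dict]):
    """Return (url, field, old_value, new_value) for every watched field that
    changed on URLs present in both snapshots. A missing field reads as None."""
    changes = []
    for url in sorted(before.keys() & after.keys()):
        for field in WATCHED_FIELDS:
            old, new = before[url].get(field), after[url].get(field)
            if old != new:
                changes.append((url, field, old, new))
    return changes


before = {"/shoes": {"title": "Shoes", "meta_robots": "index,follow", "canonical": "/shoes"}}
after = {"/shoes": {"title": "Shoes", "meta_robots": "noindex,follow", "canonical": "/shoes?page=1"}}
for change in attribute_changes(before, after):
    print(change)
# ('/shoes', 'meta_robots', 'index,follow', 'noindex,follow')
# ('/shoes', 'canonical', '/shoes', '/shoes?page=1')
```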

When these alert messages land in my inbox and something doesn’t look right, I typically log into Botify and either check the latest automated crawl of the website in question or, if the issue arose after the latest crawl, request a fresh crawl.

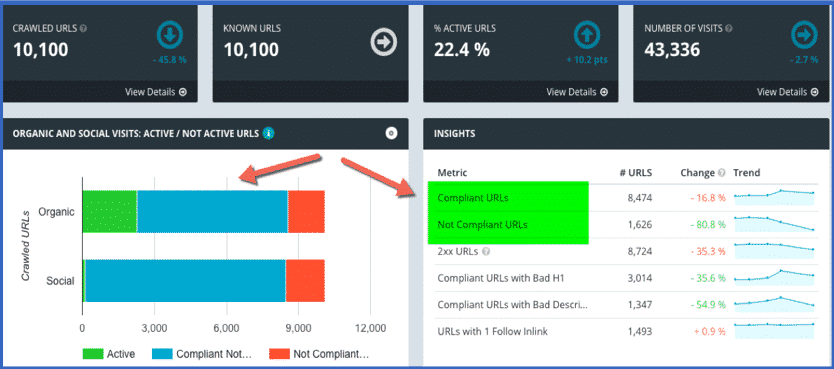

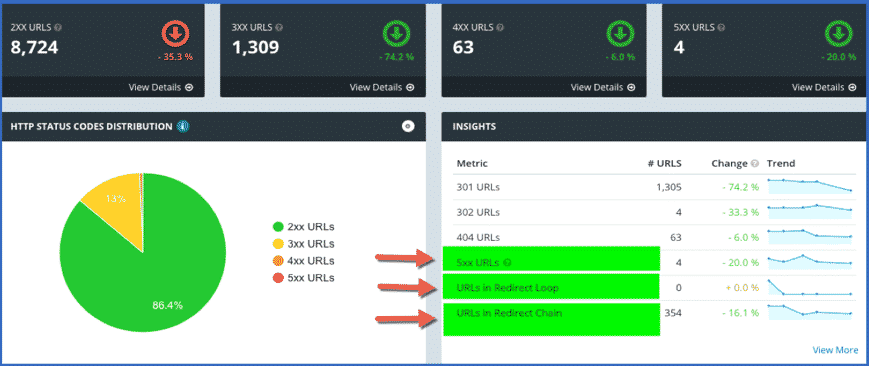

The number of active organic URLs on the site, as well as compliant vs non-compliant URLs:

What’s a compliant URL, you may wonder? A compliant URL, as Botify describes it, is one that meets the following four criteria:

- Its HTTP status code is 200

- It is self-canonical (its rel canonical points to itself)

- It’s fully indexable (no meta noindex tag)

- It has an HTML content type
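Botify computes this for you, but the four criteria translate directly into a predicate. This is a minimal sketch, assuming a hypothetical `CrawledPage` record from your own crawl export; treating a page with no canonical tag as self-canonical is an assumption here, not Botify’s documented behaviour.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CrawledPage:            # hypothetical crawl-export record
    url: str
    status: int               # HTTP status code
    canonical: Optional[str]  # rel canonical target, None if absent
    meta_robots: str          # e.g. "index,follow"
    content_type: str         # e.g. "text/html; charset=utf-8"


def is_compliant(page: CrawledPage) -> bool:
    """Apply the four compliance criteria: 200 status, self-canonical,
    no meta noindex, HTML content type."""
    return (
        page.status == 200
        # assumption: a missing canonical counts as self-canonical
        and (page.canonical is None or page.canonical == page.url)
        and "noindex" not in page.meta_robots.lower()
        and page.content_type.lower().startswith("text/html")
    )
```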

Have I got more chained redirects as a result of the issue highlighted by SEORadar? I often find the answers to the issues SEORadar highlights in one of the Botify crawl reports.

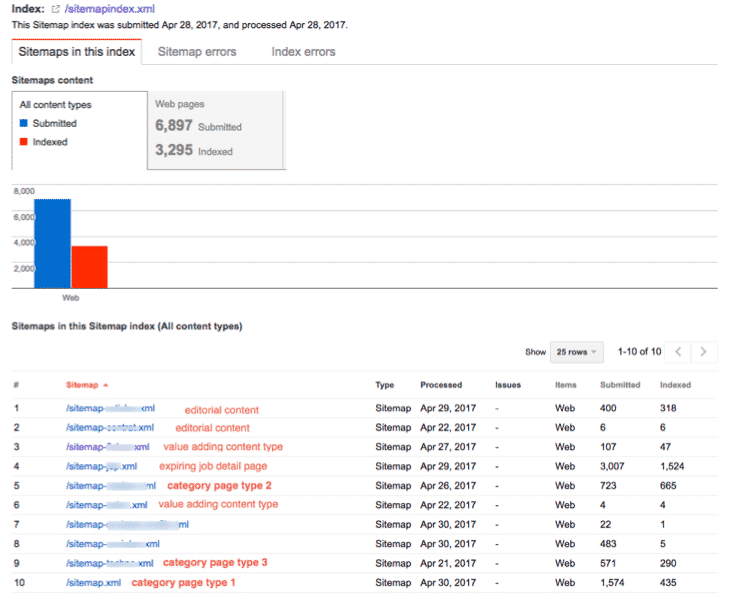
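Botify reports redirect chains directly. If you only have a raw export of redirects, a chain check is short to write. The sketch below assumes a hypothetical mapping of source URL to redirect target and reports every starting URL that takes two or more hops to resolve, stopping when it detects a loop.

```python
def redirect_chains(redirects: dict[str, str], min_hops: int = 2) -> list[list[str]]:
    """redirects maps each redirecting URL to its target (one hop).

    Returns the full path for every starting URL that needs at least
    `min_hops` hops; a path that ends on an already-visited URL is a loop.
    """
    chains = []
    for start in sorted(redirects):
        path, seen, current = [start], {start}, start
        while current in redirects:
            current = redirects[current]
            path.append(current)
            if current in seen:  # redirect loop: stop following
                break
            seen.add(current)
        if len(path) - 1 >= min_hops:
            chains.append(path)
    return chains
```

Comparing the number of chains before and after an alert answers the ‘more chained redirects?’ question with a single number.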

Another report I couldn’t do without is the sitemaps vs URLs in the structure report. It’s visual and very clear.
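That report is Botify’s own, but its core comparison can be approximated with two sets: the URLs listed in your XML sitemaps and the URLs found by crawling the site structure. A minimal sketch, assuming a standard sitemaps.org `urlset` file and a set of crawled URLs you already have; the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> set[str]:
    """Extract <loc> values from a <urlset> sitemap (not a sitemap index)."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def sitemap_vs_structure(in_sitemaps: set[str], in_structure: set[str]) -> dict[str, set[str]]:
    return {
        "both": in_sitemaps & in_structure,
        # listed in sitemaps but not found in the crawl (possible orphans or stale entries)
        "sitemap_only": in_sitemaps - in_structure,
        # found in the crawl but missing from the sitemaps
        "structure_only": in_structure - in_sitemaps,
    }
```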

And lastly, if there are alerts flagging issues to do with rankings, traffic or indexation, I would want to check the status of my sitemap’s indexation ratio:

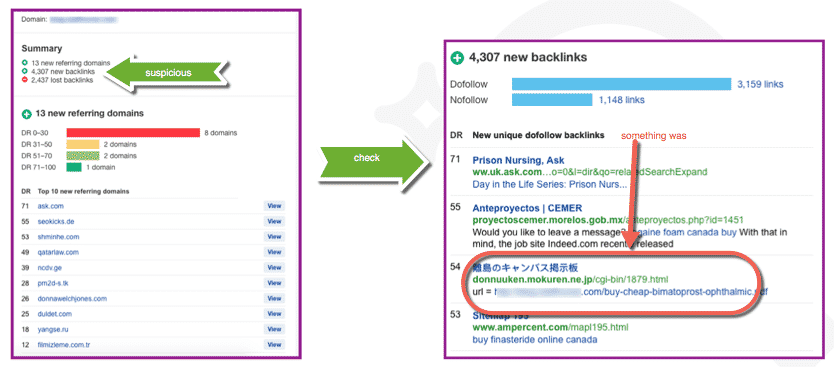
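The indexation ratio itself is simple arithmetic: indexed URLs divided by submitted URLs, per sitemap. A minimal sketch, assuming you already have submitted and indexed counts for each sitemap from your own reporting; the function name and the 80% alert level are hypothetical.

```python
def low_indexation(stats: dict[str, tuple[int, int]],
                   min_ratio: float = 0.8) -> dict[str, float]:
    """stats maps sitemap name -> (submitted_urls, indexed_urls).

    Returns {sitemap: indexed / submitted} for sitemaps below min_ratio.
    """
    flagged = {}
    for name, (submitted, indexed) in stats.items():
        if submitted == 0:
            continue  # nothing submitted: ratio undefined
        ratio = indexed / submitted
        if ratio < min_ratio:
            flagged[name] = round(ratio, 2)
    return flagged
```

Splitting sitemaps by section (products, categories, blog) makes a falling ratio point straight at the part of the site that needs attention.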

5. Monitor your backlink portfolio

Moving on to the external environment, you will want to make sure that your backlink portfolio stays clean, so you have to keep tabs on your new incoming links. Even in the post-Penguin 4.0 era, there are still a lot of unethical and egregious marketers out there who’d rather work on sabotaging you than on being more creative with their own SEO strategies.

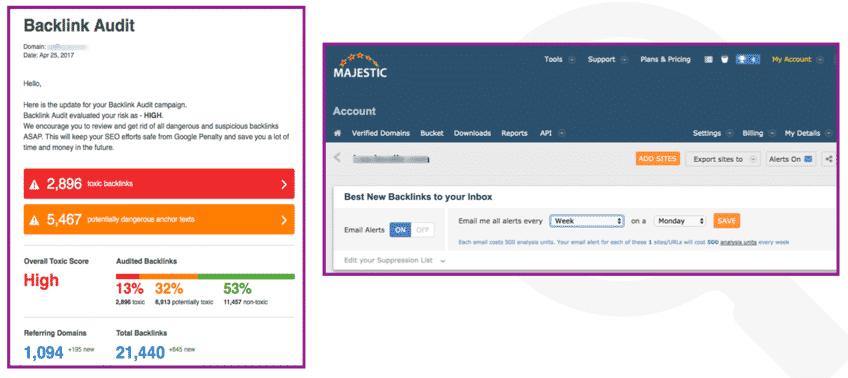

So how do I get alerted to new low-quality, spammy or bad links pointing to my site(s)? I use a combination of the following tools:

- SEMrush,

- Ahrefs

- Majestic

- Sistrix

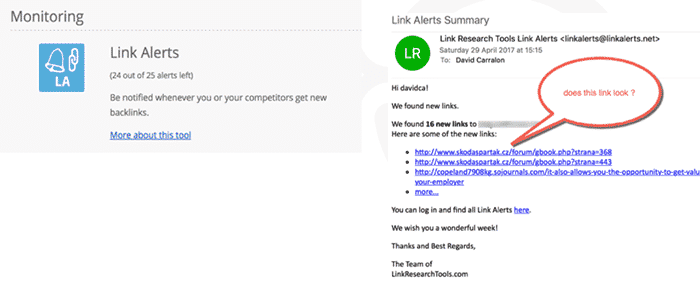

- and, until very recently, LinkResearchTools

I actually used to have it all done by LinkResearchTools, which lets you plug in the APIs of the four tools above, but since April it has become practically unaffordable given the sheer size of the network I was responsible for in my in-house role. This is especially true if you are dealing with sites that are taking on a lot of negative SEO via incoming spammy links: it quickly adds up to millions of links.

So, to the point: the tool you use for this will depend directly on your budget. I highly recommend Linkresearchtools.com if you can afford it, but you can get useful link alerts with many other tools, e.g. Ahrefs, which likely has one of the biggest link indexes currently on the market.

If you look at the detail, you will see that an email alert (left picture) is likely to tell you that something is not right: not everyone happens to get 4,307 new backlinks in a week. Following that notice with a click, we land on the details for the new links, where we can immediately see that some of them are suspicious. This can lead to further investigation and to the conclusion that the site in question may be getting spammed.

Clicking further into the ‘Best by links growth’ report, still in Ahrefs, you may get confirmation that the site’s link acquisition velocity is unusually high.
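Ahrefs and the other tools let you judge this visually. To express ‘unusually high’ as a rule, one common approach (not necessarily what any of these tools do internally) is to compare this week’s new backlinks against a baseline built from previous weeks. A minimal sketch, assuming you have weekly new-backlink counts; the three-standard-deviation threshold and the sample history are hypothetical.

```python
from statistics import mean, stdev


def link_velocity_alert(history: list[int], this_week: int, k: float = 3.0) -> bool:
    """Return True if this week's new backlinks exceed the mean of previous
    weeks by more than k standard deviations.

    history: new backlinks per week for earlier weeks (at least two values).
    A floor of 1 on the spread stops a perfectly flat history from
    triggering on a change of a link or two.
    """
    if len(history) < 2:
        return False  # not enough data for a baseline
    baseline, spread = mean(history), stdev(history)
    return this_week > baseline + k * max(spread, 1.0)


# Hypothetical weekly history, then a week like the 4,307-link one described above.
print(link_velocity_alert([120, 95, 140, 110, 130], 4307))  # True
```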

There are, of course, similar tools that let you set up proper alerts and monitoring reports for unnatural new backlinks: SEMrush and Majestic both have a new backlink alert feature.

This is an example of the link alerts I used to get with LRT:

Conclusions

Please note that the checklist of SEO alerts I suggest is not about measuring or tracking SEO progress; it is about monitoring for disaster prevention.

If you are working on something big in your role as an in-house SEO and feel that you are investing a large amount of resources, energy and time, consider protecting what you have already built by setting up alerts to keep your SEO equity safe.

Whether you are working in-house, in an agency or as a freelance SEO, and whether you are responsible for one website or a large number of them, I actively encourage you to spend some time setting up a number of different alerts that can help you prevent major disasters across the platform you operate on. Certainly, for enterprise SEO purposes, I believe there is a lot of value in training internal marketing teams to access and use SEO tools for routine tasks such as disavows.
